In a recent New York Times Sunday Review article[1], Clifton Leaf questioned the effectiveness of traditional clinical drug trials. He described how a study of a brain cancer drug showed no difference, on average, in survival between patients given the drug and those given a placebo. Some patients did fare significantly better on the drug, but the design of the trial left researchers unable to determine whom it helped and why.

Showing how variability in the effectiveness of drugs can go unstudied, Leaf asks, “Are the diseases of individuals so particular that testing experimental medicines in broad groups is doomed to create more frustration than knowledge?” With respect to education specifically, we would add, “Are the circumstances in which programs are implemented varied enough to compel some attention to context in the R&D process?” Since we first embraced the tools of improvement science as a way to approach educational problems, we have seen significant similarities between our work and that of people working in health care improvement. A key question for both fields is, “What works, for whom, and under what conditions?”

This is why Leaf’s article resonates so strongly with us. The problems that traditional clinical trials present to cancer researchers are much like those facing education researchers who test improvements in schools. Just as a disease can manifest itself differently across patients, the same change made in two different districts, schools, or classrooms can lead to widely different results. An after-school program that works in an urban school might not be effective in a rural one. A new curriculum that is effective for students in one inner-city district might have very different results in another city where English is not the first language for many students. Yet when judging the effectiveness of a program or widespread intervention, evaluators have traditionally asked only whether it generally “works.” As with clinical drug trials, an evaluation that tests only whether an intervention succeeds on average tells us little about the conditions under which it does or does not work. And even if an intervention is found to “work” on average, policymakers should not assume that the result generalizes. Nevertheless, political leaders and policymakers continue to take apparently “successful” interventions and try to bring them to scale across a wide range of schools, seeking to maintain fidelity of implementation as the program goes to scale. Insisting on fidelity, however, leaves no room for differences in local context; allowing for those differences leads instead to what is better described as integrity of implementation[2].

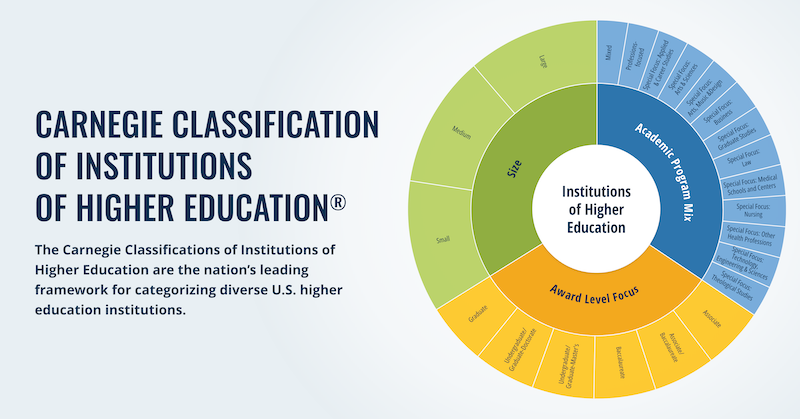


At Carnegie, we seek to help adapt interventions to the particular circumstances and needs of the individual practitioners who carry out the work, through a form of disciplined testing that makes a change applicable to students from diverse backgrounds and with particular learning needs. To be clear, Carnegie does not advocate that every educator develop a different practice that works best for him or her. That approach would inevitably produce great variability in outcomes, as some teachers find successful practices and others do not. Instead, Carnegie argues for a third way of conceiving the education R&D enterprise, one that allows both adaptation to local contexts and the ability to scale a solution.

Working in Networked Improvement Communities (NICs) of practitioners, designers, researchers, and users, we are able to adapt to local contexts and, through the organizational structure of the network, generalize and scale solutions. Improvement science is a formal method of continuous improvement that tests a change on a small scale before scaling up, all the while learning how an intervention’s effects vary under different conditions. With common targets, a shared language, and common protocols for inquiry, practitioners across a network can share what they learn with one another and spread successful interventions.

The vehicle for this work is the Plan-Do-Study-Act (PDSA) cycle, a model for small tests of prototyped changes. In running these tests, practitioners learn about the effect of an intervention and build warrant for it as they continue testing adaptations. Initial tests take place on a small scale: one teacher in one classroom. The goal of these first tests is simply to learn whether the intervention works and to refine it. If the change idea succeeds, it is then tested in different contexts, for example by two teachers in different classrooms or by a teacher in a different grade. Through this progression, practitioners learn under what conditions the change idea works, which shows them where it is and is not appropriate to scale and how it can be adapted to different conditions.


In the New York Times piece, Leaf describes emerging clinical trial methods that allow changes to be made without stopping the study. By using data analytics to inform an experiment while it is still running, doctors can make changes and incorporate what they learn into the ongoing trial. In the same way that PDSAs allow learning and adaptation to occur within a practitioner’s work over a year or term, these clinical trials adapt over time, allowing researchers to understand which patients the drug works best for and to make changes accordingly. Through such trials, researchers learn a great deal about the drug’s efficacy. Mark Gilbert, a professor of neuro-oncology at the University of Texas M.D. Anderson Cancer Center in Houston, offered this definition of a successful clinical trial: “At the end of the day…regardless of the result, you’ve learned something.” As we like to put it, clinical trials teach us what can work; improvement science teaches us how to make it work.


Links

- [1] http://www.nytimes.com/2013/07/14/opinion/sunday/do-clinical-trials-work.html

- [2] http://commons.carnegiefoundation.org/what-we-are-learning/2011/what-we-need-in-education-is-more-integrity-and-less-fidelity-of-implementation/