Teacher evaluation has evolved markedly over the past four years. In 2013, teacher performance evaluations are, more often than not, determined at least in part by student achievement, which was not the case just four years ago.

This rapid evolution has been catalyzed in no small part by federal Race to the Top and Teacher Incentive Fund monies, as well as a national discourse that asserts that quality teaching must, by definition, raise student achievement. Unsurprisingly, the consequent proliferation of evaluation systems has also yielded a great deal of variation in system design, structure, and coherence.

This is the essence of the latest State of the States report published last month by the National Council on Teacher Quality (NCTQ): while most states now include measures of student achievement in teacher evaluations, not all states are connecting evaluation policies with other personnel decisions of consequence. The report compares states’ teacher evaluation policies and assesses the extent to which they are linked explicitly via legislation to 11 consequential decisions (i.e., tenure, professional development, improvement plans, public reporting of aggregate ratings, compensation, dismissal, layoffs, licensure advancement, licensure reciprocity, student teaching placements, and teacher preparation program accountability). According to Sandi Jacobs, vice president of NCTQ, “[S]ome states have a lot more details sorted out than others do” with regard to linking teacher evaluation – based mostly on student achievement – to these decisions.

There are a number of implicit assumptions in this report that warrant exploration, not necessarily in response to the report itself, but rather in response to state action that is generally moving in this direction. Indeed, Louisiana has connected teacher evaluation policies to nine of the specified decisions (all but licensure reciprocity and student teaching placements), while legislation in Colorado, Delaware, Florida, Illinois, Michigan, Rhode Island, and Tennessee has connected evaluations to more than half of the decisions. The discussion here recommends that states use caution when “connecting the dots” between teacher evaluation and personnel decisions.

First, it is worthwhile to note that NCTQ is making an a priori assumption that tying teacher evaluation to the 11 personnel decisions specified in the report is a meaningful, productive, and efficient way to organize a teacher evaluation system. By extension, the report implies that systems not connected in this way are counterproductive and inefficient. The report does not offer evidence to support this claim, either in terms of increased student achievement or other measures of productivity (e.g., decreased teacher turnover). Related to this, state legislation is not synonymous with an evaluation system, a point recently made elsewhere by Rick Hess. A set of statutes may constitute a set of facilitating conditions under which an evaluation system is created, but the NCTQ report does not discuss how a state might do this. In this respect, the theory of change suggested by the NCTQ report is quite lengthy: from “connected” state legislation to a coherent evaluation system that elicits information about teachers that could inform personnel decisions, which may positively impact the teaching workforce, thereby enhancing the quality of teaching, which in turn may improve student outcomes.

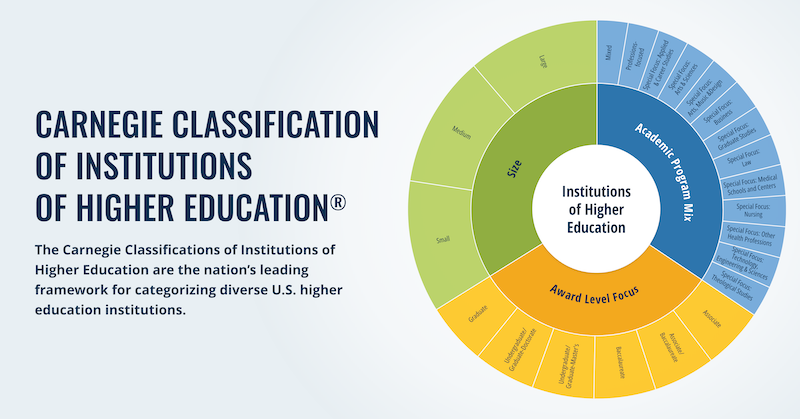

Second, the report assumes – based on other influential findings, such as those reported by the Measures of Effective Teaching Project – that teacher evaluation results should be composed of multiple measures, with student achievement as the chief determinant. But outside of a passing reference to one state (Rhode Island), there is no indication in the NCTQ report that the way in which these measures are combined matters at all. In fact, it matters a great deal, according to a recent brief by Carnegie Knowledge Network (CKN) panelist Doug Harris. Harris shows that while many states employ a weighted average approach to multiple measures, resulting in a single index of effectiveness, this approach is likely to be more costly than other approaches (i.e., all measures are needed for all teachers) and may diminish valuable nuance in the data. Further, he maintains that we should ultimately be concerned with the outcomes of the personnel decisions (i.e., teachers’ reactions to them) rather than the particular method by which they are made. However, Harris notes that this requires a “different kind of evidence” than is currently collected – that is, evidence is needed on the implementation of consequential decisions, and measures are necessary to ascertain unintended and adverse side effects.
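
To make the weighted average concern concrete, here is a minimal sketch in Python. The measure names, weights, and scores are hypothetical and chosen only for illustration; they are not taken from Harris's brief or from any state's system. The sketch shows two things the paragraph above describes: every measure must be present for every teacher, and two quite different profiles can collapse into the same single index.

```python
# Hypothetical weights for three measures; a real system would set its own.
WEIGHTS = {"student_growth": 0.50, "observation": 0.35, "survey": 0.15}


def composite_index(scores, weights=WEIGHTS):
    """Combine multiple measures into one weighted-average index.

    Raises ValueError if any weighted measure is missing, because a
    weighted average needs every measure for every teacher.
    """
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing measures: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


# Two hypothetical teachers with opposite strengths (scores on a 1-4 scale).
teacher_a = {"student_growth": 4.0, "observation": 2.0, "survey": 2.0}
teacher_b = {"student_growth": 2.0, "observation": 4.0, "survey": 4.0}

for label, scores in (("A", teacher_a), ("B", teacher_b)):
    print(label, round(composite_index(scores), 2))
```

Under these assumed weights both teachers receive an index of about 3.0, even though one is strong on student growth and the other on observation and survey results. That loss of detail is one way a single index can diminish nuance in the data.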

Third, the part of the NCTQ report most emphasized in a recent U.S. News article is the use of evaluation data to identify high-quality teacher preparation programs. The assumptions here are that: (1) some programs are more effective than others, and (2) teacher evaluation can elicit useful information against which teacher prep programs might be held accountable. Another Carnegie brief by Dan Goldhaber discusses issues related to the latter. According to Goldhaber, comparing individual teacher prep programs is tricky because “we cannot disentangle the value of a candidate’s selection to a program from her experience there.” In other words, some programs benefit from more rigorous selection criteria. Critically, Goldhaber argues that smaller teacher training programs are unlikely (due to standard errors and confidence intervals) to be statistically distinguishable from average. This would incentivize small programs to stay small unless they can drastically improve, and induce large programs to reduce the number of graduates unless they also can drastically improve. But because research into the aspects of teacher training that matter most for producing quality graduates is limited, drastic improvement would be difficult.
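
The point about program size can be illustrated with a short, simplified calculation. This is only a sketch under stated assumptions: it treats a program's estimated effect as a simple mean over its graduates, uses a normal approximation for a 95% confidence interval, and plugs in hypothetical numbers for the spread of teacher effectiveness and for the program's true effect. It is not the method used in Goldhaber's brief.

```python
import math


def ci_half_width(teacher_sd, n_graduates, z=1.96):
    """Half-width of an approximate 95% confidence interval for a
    program's mean effect, assuming standard error = sd / sqrt(n)."""
    return z * teacher_sd / math.sqrt(n_graduates)


def distinguishable_from_average(program_effect, teacher_sd, n_graduates):
    """True when the interval around the estimate excludes zero,
    where zero stands for the average program."""
    return abs(program_effect) > ci_half_width(teacher_sd, n_graduates)


TEACHER_SD = 0.20      # hypothetical spread of individual teacher effects
PROGRAM_EFFECT = 0.03  # hypothetical true advantage over the average program

for n in (25, 100, 400):
    half_width = ci_half_width(TEACHER_SD, n)
    flag = distinguishable_from_average(PROGRAM_EFFECT, TEACHER_SD, n)
    print(f"graduates={n:4d}  half-width={half_width:.3f}  distinguishable={flag}")
```

With these assumed values, the same true advantage is statistically invisible for a program with 25 or 100 graduates and only becomes distinguishable at 400, because the interval narrows with the square root of the number of graduates.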

Fourth, and arguably most critically, the NCTQ report assumes that systems of evaluation and systems of improvement are one and the same, and that information elicited by the former can be used for the latter. This is not inherently true, though neither are the two types of systems mutually exclusive. A Carnegie policy brief on post-observation feedback for teachers by Jeannie Myung and Krissia Martinez illustrates this tension. Myung and Martinez find that while many districts have committed to collecting a large quantity of observational data, “the field still has a lot to learn about how best to use data to support the improvement of teaching.” Perceived threats in post-observation evaluative conversations (e.g., unclear expectations, a sense of disempowerment, the absence of helpful information) can impair attempts at improvement. In contrast, the authors found that feedback facilitates the improvement of teacher practice when improvement-oriented strategies (i.e., scaffolded listening strategies, a predictable format, addressing the teacher’s concerns, and the co-development of next steps) are purposefully incorporated in the feedback protocol.

A small and final point concerns the not insignificant costs associated with more “ambitious evaluation systems.” The NCTQ report appears to assume that there is an abundance of both time and money allocated to evaluation and that, consequently, there will be no trade-offs or additional real or opportunity costs associated with “ambitious” evaluation. But since time and money are not things most districts have in abundance, trade-offs are likely. Moreover, the final price tag is unknown for many states. For example, researchers priced Minnesota’s new teacher evaluation system somewhere between $80 million and $289 million. The Carnegie Foundation has developed a Cost Calculator that can help district leaders estimate both the time and financial resources involved in teacher personnel evaluation. This tool can certainly aid in enumerating the direct costs of evaluation, but districts will have to supplement the data it generates with their own estimates of opportunity costs.

Teacher evaluation is certainly here to stay; there is no one who disagrees with the assertion that the demanding and important work of teaching – like that of other professions – should be evaluated. But that does not preclude caution or a healthy dose of skepticism when “connecting the dots” between teacher evaluation and consequential personnel decisions. Neither does it suggest that “connecting the dots” is a simple matter, that there are no trade-offs, or that there are no complex issues to confront. Our work at the Foundation suggests such caution is warranted. But most of all, we should not mistakenly assume that teacher evaluation alone will lead to the improvement of teaching. Our system of education would be the worse for it.
